We also track specific features of the stunt double from a stabilized plate (using an inverse of the aforementioned Tracker) and plug that data into SplineWarps. You can then draw a shape in the SplineWarp that closely tracks the plate, so you will only need minimal keyframes to warp things to where they need to be. Lastly, we keymix between the two warps. Any “blurry” pixels will effectively dissolve from one warp to the other without ghosting, and this is where the real power of this technique lies!
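The “without ghosting” claim for the keymix step above can be illustrated with a toy 1-D sketch, assuming the keymix is applied to the two ST maps (the coordinates) rather than to the two warped images. That assumption is one reading of the setup, not a description of the author’s exact script. `shift_map`, `apply_map` and `keymix` are hypothetical helpers standing in for an ST Map, a nearest-neighbour version of Nuke’s STMap node, and a Keymix-style blend (A where the mask is 1, B where it is 0).

```python
def shift_map(width, dx):
    """Hypothetical 1-D identity ST map, offset so image content moves right by dx px."""
    return [(x + 0.5 - dx) / width for x in range(width)]


def apply_map(src, st):
    """Nearest-neighbour STMap-style lookup; edge pixels repeat outside the frame."""
    w = len(src)
    return [src[min(w - 1, max(0, int(s * w)))] for s in st]


def keymix(a, b, mask):
    """Keymix-style blend: A where mask is 1, B where mask is 0, mixed in between."""
    return [ai * m + bi * (1 - m) for ai, bi, m in zip(a, b, mask)]


src = [0, 0, 0, 1, 0, 0, 0, 0]  # one bright feature at x = 3
warp_a = shift_map(8, 0)         # hypothetical warp A: leaves the feature in place
warp_b = shift_map(8, 2)         # hypothetical warp B: moves it 2 px to the right
soft = [0.5] * 8                 # a "blurry" mask value, half way between the warps

# Keymixing the warped *images* cross-dissolves two copies of the feature.
ghosted = keymix(apply_map(src, warp_a), apply_map(src, warp_b), soft)
print(ghosted)    # [0.0, 0.0, 0.0, 0.5, 0.0, 0.5, 0.0, 0.0]

# Keymixing the *ST maps* interpolates positions: one copy, half way along.
dissolved = apply_map(src, keymix(warp_a, warp_b, soft))
print(dissolved)  # [0, 0, 0, 0, 1, 0, 0, 0]
```

Blending coordinates means a soft mask edge produces an in-between *position* instead of two half-transparent copies, which is why the transition reads as a dissolve of the warp rather than a double exposure.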

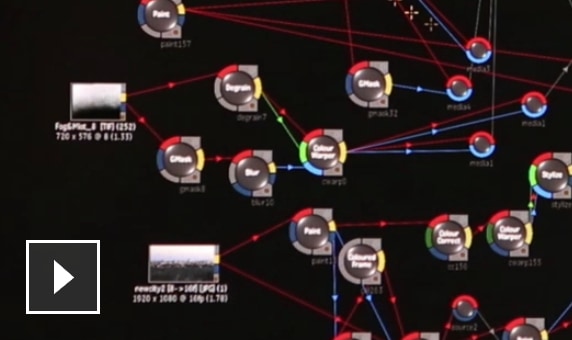

As a real-world example, warping an actor’s head to stick to a stunt double’s body requires a few different tracks & SplineWarps. Using the setup pictured, we’re able to track the general motion of the stunt double and apply the data to an ST Map (STUNT_DOUBLE_TRACK1).
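Applying tracked motion to an ST Map, as in STUNT_DOUBLE_TRACK1 above, can be sketched as offsetting an identity ST Map by the track’s movement relative to a reference frame. This is a translation-only sketch under stated assumptions: the `TRACK` values and `REFERENCE_FRAME` are hypothetical, a real track may also carry rotation and scale, and `apply_st_map` is a nearest-neighbour stand-in for Nuke’s STMap node. The `stabilize` flag shows how inverting the same offset cancels the motion, which is the idea behind working on a stabilized plate.

```python
# Hypothetical tracker output: frame -> (x, y) pixel position of the stunt double.
TRACK = {1001: (120.0, 80.0), 1002: (123.0, 81.0), 1003: (127.0, 83.0)}
REFERENCE_FRAME = 1001  # hypothetical frame where the element already lines up


def offset_st_map(width, height, dx, dy):
    """Identity ST map shifted so the image it is applied to moves by (dx, dy) px."""
    return [[((x + 0.5 - dx) / width, (y + 0.5 - dy) / height)
             for x in range(width)] for y in range(height)]


def track_st_map(frame, width, height, stabilize=False):
    """ST map following the track relative to REFERENCE_FRAME (or cancelling it)."""
    ref_x, ref_y = TRACK[REFERENCE_FRAME]
    x, y = TRACK[frame]
    dx, dy = x - ref_x, y - ref_y
    if stabilize:  # inverse of the track: remove the motion instead of adding it
        dx, dy = -dx, -dy
    return offset_st_map(width, height, dx, dy)


def apply_st_map(src, st_map):
    """Nearest-neighbour stand-in for Nuke's STMap node (clamps at the edges)."""
    h, w = len(src), len(src[0])
    return [[src[min(h - 1, max(0, int(t * h)))][min(w - 1, max(0, int(s * w)))]
             for s, t in row] for row in st_map]


# A 9x9 frame (row 0 at the bottom) with one bright feature at x = 2, y = 2.
frame = [[1 if (x, y) == (2, 2) else 0 for x in range(9)] for y in range(9)]

# Frame 1002 moved (3, 1) px from the reference, so the feature lands at (5, 3).
moved = apply_st_map(frame, track_st_map(1002, 9, 9))

# The inverted (stabilizing) map puts it back where it started.
restored = apply_st_map(moved, track_st_map(1002, 9, 9, stabilize=True))
```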

SplineWarping, GridWarping, or any other type of warping in Nuke can be pretty tedious, especially when you try to do it all in one node.

Some programs export ST/UV Maps, whereas other, more popular options such as 3DEqualizer have their own nodes to use in Nuke. This can be a problem when packaging a Nuke script to send to a stereo-conversion vendor, as sharing proprietary nodes is frowned upon. To get around this, you can create a new (identity) ST Map, apply 3DEqualizer’s Lens Distortion node to it, then pre-render the distorted ST Map as a 32-bit EXR. The node setup pictured above will then apply your lens distortion the same way as the proprietary node! Note: I recommend doing this conversion at the tech check stage, so the final version of your comp includes the STMap-based Lens Distortion. Visually, the results will be near-identical, although a difference between the STMap node and any Lens Distortion node will reveal very minor discrepancies due to filtering.
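The lens-distortion bake described above works because any pure warp applied to an identity ST Map records, per pixel, where that warp samples from. A minimal sketch of the idea follows. `radial_distort` is a hypothetical one-term radial model standing in for the proprietary node; it is NOT 3DEqualizer’s distortion model, it only needs to be some warp. The resolution and `K1` strength are made up, and the lookups are nearest-neighbour.

```python
def identity_st_map(width, height):
    """Identity ST map: (s, t) at each pixel centre, row 0 at the bottom."""
    return [[((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
            for y in range(height)]


def apply_st_map(src, st_map):
    """Nearest-neighbour stand-in for Nuke's STMap node (clamps at the edges)."""
    h, w = len(src), len(src[0])
    return [[src[min(h - 1, max(0, int(t * h)))][min(w - 1, max(0, int(s * w)))]
             for s, t in row] for row in st_map]


def radial_distort(src, k1):
    """Hypothetical lens-distortion node: one-term radial model, NOT 3DE's.

    Each output pixel samples the source at its offset from the frame centre,
    scaled by (1 + k1 * r^2), with r normalized to the half-width.
    """
    h, w = len(src), len(src[0])
    cx, cy = w / 2, h / 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            px, py = x + 0.5 - cx, y + 0.5 - cy
            scale = 1 + k1 * (px * px + py * py) / (cx * cx)
            sx = min(w - 1, max(0, int(cx + px * scale)))
            sy = min(h - 1, max(0, int(cy + py * scale)))
            row.append(src[sy][sx])
        out.append(row)
    return out


W, H, K1 = 16, 9, 0.1  # hypothetical resolution and distortion strength

# 1. Push a fresh identity ST map through the "proprietary" node; this is
#    the image you would pre-render as a 32-bit EXR.
baked_st_map = radial_distort(identity_st_map(W, H), K1)

# 2. Any plate can then be distorted with the baked map alone.
plate = [[(x, y) for x in range(W)] for y in range(H)]
assert apply_st_map(plate, baked_st_map) == radial_distort(plate, K1)
```

With nearest-neighbour lookups the two paths match exactly. Once either side filters between pixels (as real STMap and Lens Distortion nodes do), they sample slightly differently, which is consistent with the very minor filtering differences mentioned above.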


Now that we have the theory & basic setup out of the way, what practical applications does ST Mapping have in Nuke? Seemingly every camera-tracking package on the market today has its own way of getting lens distortion data into Nuke.


What’s the difference between ST Maps and UV Maps, anyway? ST maps and UV maps look and function exactly the same way in Nuke, so it’s pretty difficult to tell them apart. Out of curiosity, I did a bit of Googling and asked a bunch of smart colleagues what they knew, although the answers I got were pretty vague. While there doesn’t seem to be a clear answer about the “why”, I did uncover some interesting knowledge about the “what”!

UV Maps refer to X and Y coordinates, so it would make sense to call them XY Maps. However, in CG terminology XYZ is reserved to denote an object’s position in 3D space. U & V were the next-closest letters that made sense, and hence were used… ST Maps (named after S & T, the next-closest letters after those) are parametric coordinates that originated in earlier versions of RenderMan. Technically, UVs are used for texture coordinates (object space), and STs are used for surface coordinates (texture space). What this means for compositors using Nuke is that we use “ST maps”, as we’re using them in relation to textures/images, not 3D geometry.

In an ST Map, the red channel stores the horizontal coordinate and the green channel the vertical one. On the X-axis, the left-most pixel has a red value of 0 and the right-most pixel has a value of 1. On the Y-axis, the bottom-most pixel has a green value of 0 and the top-most pixel has a value of 1. Lastly, whenever you pre-render an ST Map, be sure to render 32-bit EXRs to retain the full & correct information in your ST Map.
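The red/green convention and the bit-depth advice above can be checked with a small sketch. It assumes pixel-centre sampling, s = (x + 0.5) / width, which is a common way to build an identity ST Map; the edge pixels therefore sit just inside 0 and 1 rather than exactly on them. `apply_st_map` is a hypothetical nearest-neighbour stand-in for Nuke’s STMap node, and `quantize` simulates storing the map in an 8-bit integer channel.

```python
def identity_st_map(width, height):
    """Identity ST map as rows of (s, t) pairs, with row 0 at the bottom (as in Nuke).

    s (red) runs left to right and t (green) runs bottom to top. Assumes
    pixel-centre sampling, s = (x + 0.5) / width.
    """
    return [[((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
            for y in range(height)]


def apply_st_map(src, st_map):
    """For every output pixel, fetch the source pixel addressed by its (s, t).

    Nearest-neighbour lookup with edge clamping, for clarity; Nuke's STMap
    node filters between pixels instead.
    """
    src_h, src_w = len(src), len(src[0])
    out = []
    for row in st_map:
        out_row = []
        for s, t in row:
            x = min(src_w - 1, max(0, int(s * src_w)))
            y = min(src_h - 1, max(0, int(t * src_h)))
            out_row.append(src[y][x])
        out.append(out_row)
    return out


def quantize(value, bits):
    """Simulate storing a 0-1 value in an integer channel of the given bit depth."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels


# An identity ST map returns the image unchanged.
img = [[(x, y) for x in range(4)] for y in range(3)]
assert apply_st_map(img, identity_st_map(4, 3)) == img

# Store one row of a 1920-wide identity map at 8 bits versus full float and
# count how many distinct source columns each version can still address.
width = 1920
row = identity_st_map(width, 1)[0]
cols_float = {int(s * width) for s, _ in row}
cols_8bit = {min(width - 1, int(quantize(s, 8) * width)) for s, _ in row}
print(len(cols_float), len(cols_8bit))
```

An 8-bit channel has only 256 levels, so it can address at most 256 of the 1,920 columns and every other column snaps to a neighbour, while the float version keeps all 1,920. That arithmetic is the reason to pre-render ST Maps as float EXRs rather than 8-bit images.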
